Understanding Natural Language Input as a UX Designer

Clear technical and behavioral reasons explain the shift to word-based interfaces, and UX designers need to understand them to keep up.


TL;DR - Natural language became the standard interface for generative AI because it aligns with how models are trained and how humans behave. It lowers the barrier to entry, speeds up iteration, and works across devices and languages. For designers, this shift means new design patterns, new validation methods, and the need for technical literacy in AI models.


Words as the New User Interface

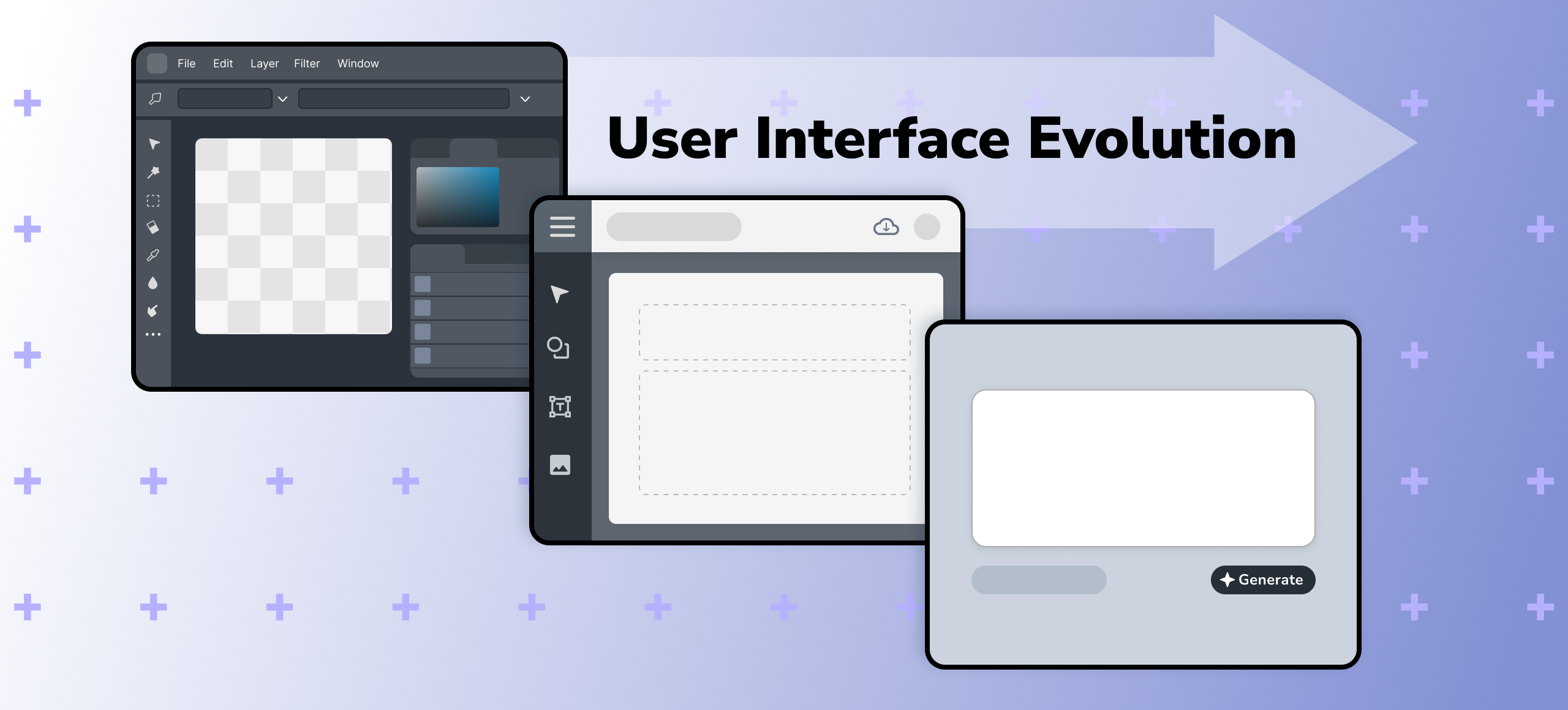

Generative AI tools have fundamentally changed the way we interact with software. Instead of a blank canvas surrounded by toolbars, we get a simple text box.

Part of why generative AI tools are seeing such rapid and widespread adoption is their extremely low barrier to entry. Despite the complicated technology behind them, anyone can start using these tools through natural language, something every human being is already familiar with. For applications with voice input, creating something is as easy as saying it out loud.

Why GenAI Defaults to Natural Language Input

There are both technical and behavioral reasons why so many GenAI tools have adopted text input.

⚙️ The Technical Explanation

Training-data alignment

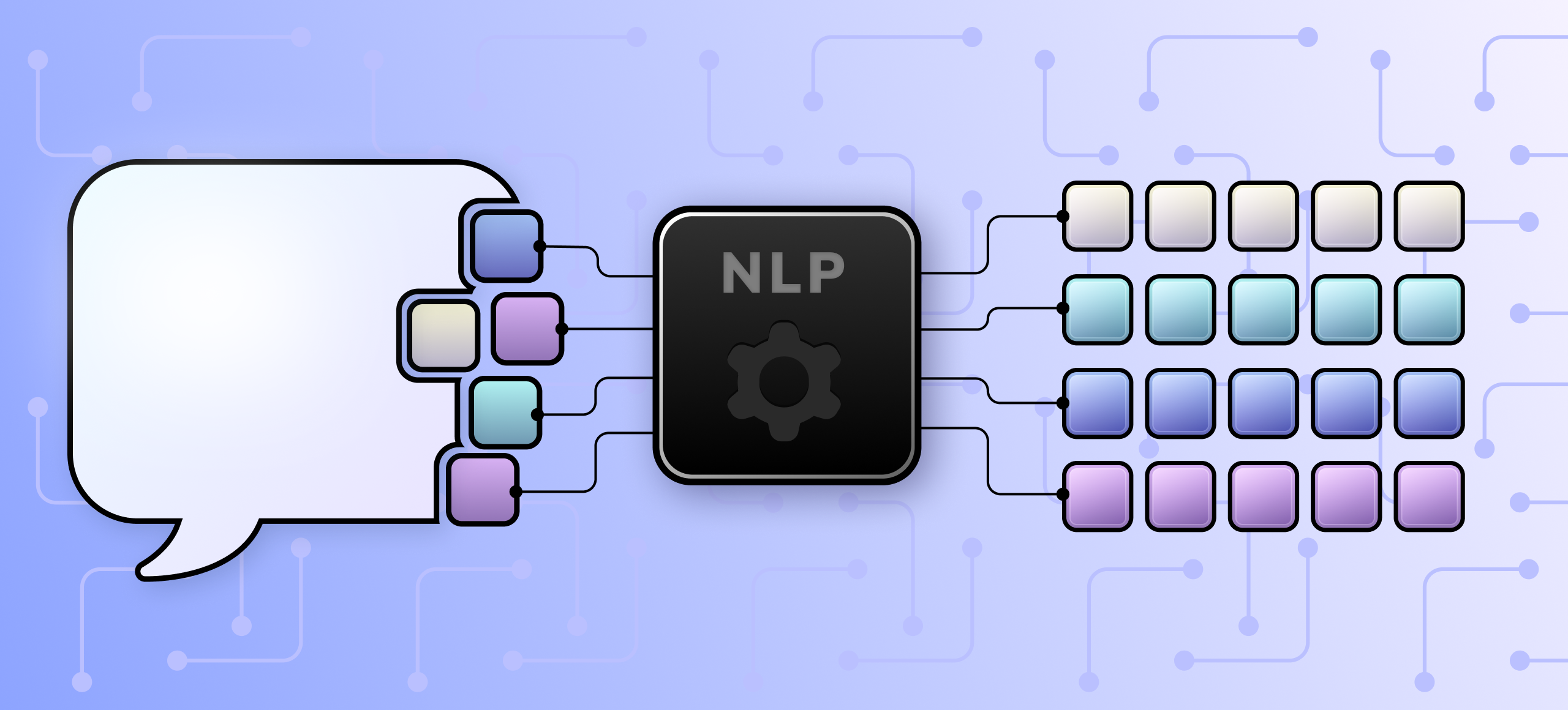

Most generative models are trained on text. LLMs (Large Language Models, like the GPT models behind ChatGPT) are trained on large text corpora drawn largely from the internet, while image generators are trained on captioned image-text pairs. These models break language into small pieces called tokens and process those tokens to generate relevant content. The most direct and reliable way to interact with them is through the same format they were trained on: natural language.
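For readers curious what "breaking language into tokens" looks like, here is a minimal Python sketch. It assumes a tiny, hypothetical vocabulary (`VOCAB`) and uses a greedy longest-match rule, similar in spirit to WordPiece. Production LLMs learn vocabularies of many thousands of pieces from data and often use other schemes such as byte-pair encoding, so treat this as an illustration of the idea, not how any particular model tokenizes.

```python
# Hypothetical, hand-picked vocabulary of subword pieces.
# Real models learn vocabularies of tens of thousands of pieces from data.
VOCAB = {"un", "believ", "able", "design", "er", "s", " "}


def tokenize(text: str, vocab: set[str] = VOCAB) -> list[str]:
    """Split text into the longest matching vocabulary pieces, left to right.

    Characters not covered by the vocabulary become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a piece matches.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary piece starts here: fall back to one character.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("unbelievable designers"))
# → ['un', 'believ', 'able', ' ', 'design', 'er', 's']
```

Notice that "designers" is split into pieces the model has seen before, which is how a fixed vocabulary can still cover words it never stored whole.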

Universal hardware

Language-based input doesn’t require new hardware. Keyboards and microphones have been part of modern devices and daily life for decades. For most mainstream GenAI tools, the real power comes from the server side, where large models handle the complexity. This makes the approach compatible with existing infrastructure, even on low-end devices, as long as processing happens remotely.

Multi-lingual reach

Localization and global scaling are achievable at relatively low cost. Multilingual datasets are already common in model training, and together with modern translation tools, they let a single model support many languages without retraining from scratch.

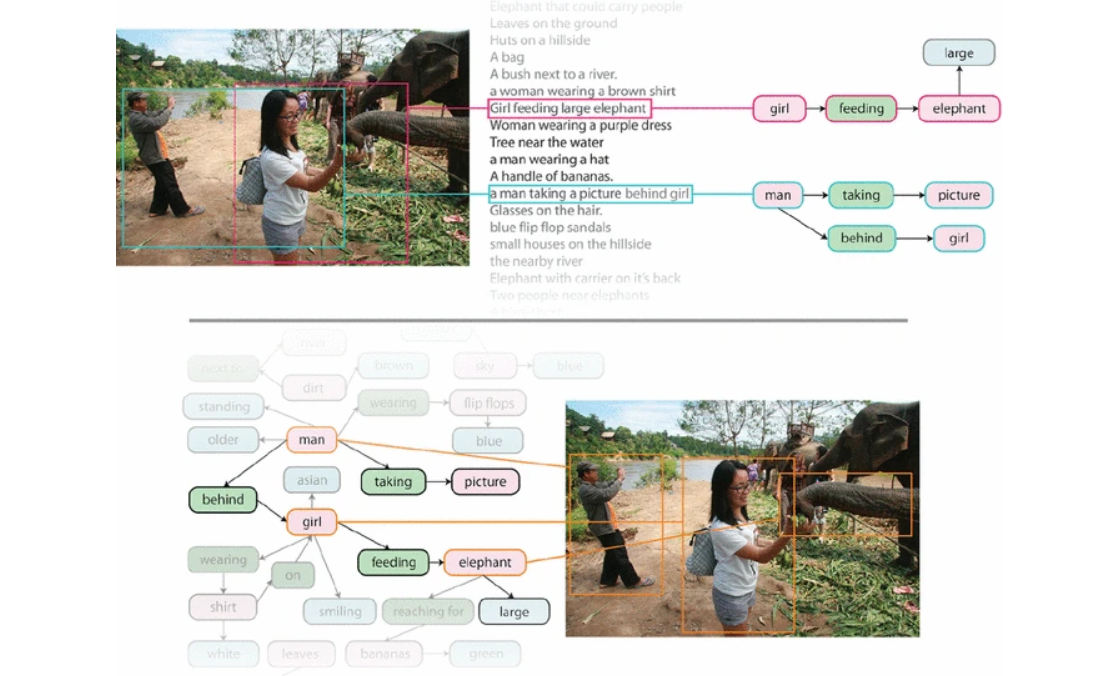

This example from the Visual Genome dataset (Krishna et al., 2017) illustrates how natural language is used in image training. Each labeled word is tied to a region of the image and to related words through attributes and relationships. Annotations like these help models learn to associate text with images.

🧠 The Behavioral Explanation

Low cognitive load

The learning curve is much gentler than with other input methods. Users don't have to learn or remember what each menu item does.

The work process also carries less mental overhead. Traditional creation tools often require users to plan a sequence of steps before executing any commands. With natural language input, users can type or speak naturally while their intent is still taking shape.

This has significantly changed how we think when using tools like ChatGPT for writing or GitHub Copilot for coding.

High semantic density, faster iterative loop

A short sentence can express intent, tone, and stylistic nuance that would otherwise require multiple dropdowns or sliders. This lets users reach a result in fewer steps, especially after they learn how to use the right keywords.

When the result isn’t as expected, the effort and time needed to retry are fairly low. The fast feedback loop and low cost (often free) encourage users to experiment freely.

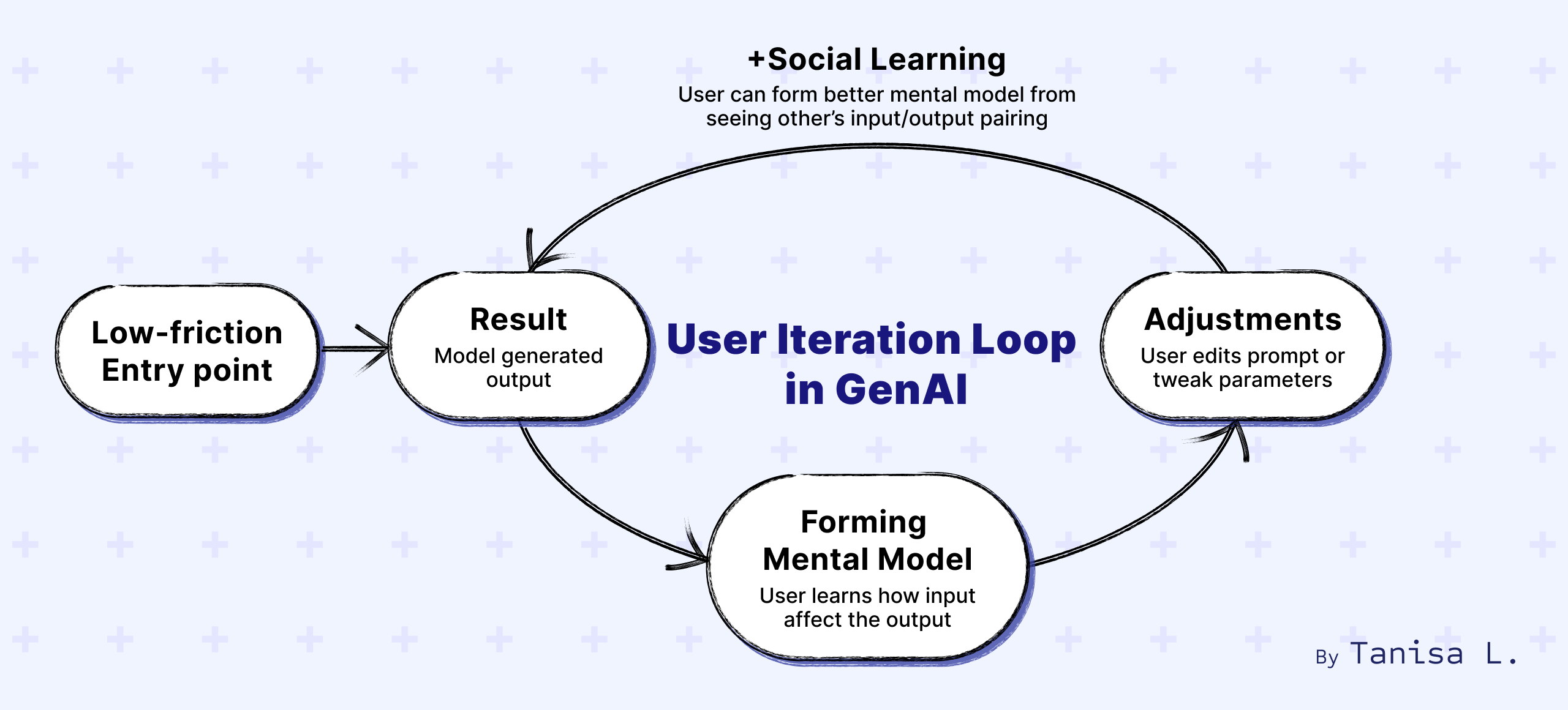

Shareable prompts & social learning

Prompts are just text, the simplest form of content there is, which makes them extremely easy to share. No technical knowledge is required to copy and paste a prompt and get results. Users and content creators can exchange optimized prompts and quickly build on each other's prompt engineering techniques, which accelerates learning.

Platforms like Midjourney’s Discord have created a distinctive prompting community that grows with the technology. Midjourney's server, the largest public server on Discord, has nearly 21 million members and 1-2 million daily active users. Images generated in its public channels appear alongside their prompts, so new users can browse millions of examples as soon as they join.

Even incorrect outputs, such as Gemini or ChatGPT hallucinating odd answers, contribute to community growth. When people share these results, they become viral micro-learning moments.

A typical user flow with a GenAI tool forms a loop, and users are expected to go through multiple loops in the same session. Each loop is short and relatively low effort. The user's mental model (how the user thinks the system works) should grow stronger with each loop.

What Does This Mean for UX Designers?

Natural language interfaces are the result of an alignment between human behavior and technical capabilities, and they are here to stay.

Designers need to move beyond traditional visual UI patterns toward open-ended, language-based interaction designs.

🧩 New design patterns

Language-based input, coupled with somewhat unpredictable results, creates new kinds of behavior and expectations. Human-AI interaction designers can’t rely solely on traditional UX methodology to deliver smooth user experiences. Read more about natural language input pitfalls, and the design patterns that help avoid them, in my other article: Natural Language: The Best & Worst UI We've Got.

🎲 Exploratory trial-and-error as the default workflow

Setting aside assistants like Siri or Alexa, whose inputs and functions are simple and relatively fixed, there is no longer a single ‘happy path’ in the traditional sense.

In many tools, especially GenAI platforms, users treat prompting as a series of quick iteration loops, making small adjustments until they get the output they want. The first result is rarely the final one. The interface has become a space for constant iteration.

Designers must help users keep track of the input and output of each attempt. This makes reversibility, reproducibility, and visible history core to the experience.
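One way to picture these three requirements is as a small data model behind the interface. The Python sketch below is hypothetical and not taken from any specific product: each `Attempt` stores the prompt, the output, and an assumed `seed` setting so the attempt can be reproduced, while `PromptHistory` keeps an append-only log and a pointer to the attempt currently on screen.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Attempt:
    """One prompt-and-result loop, with enough detail to reproduce it."""
    prompt: str
    output: str
    seed: Optional[int] = None  # hypothetical generation setting


@dataclass
class PromptHistory:
    """Append-only log of attempts plus a pointer to the one on screen."""
    attempts: list[Attempt] = field(default_factory=list)
    current: int = -1  # -1 means nothing has been generated yet

    def record(self, prompt: str, output: str, seed: Optional[int] = None) -> Attempt:
        # New attempts are always appended; earlier ones are never overwritten.
        attempt = Attempt(prompt, output, seed)
        self.attempts.append(attempt)
        self.current = len(self.attempts) - 1
        return attempt

    def revert(self, index: int) -> Attempt:
        # Reversibility: move the pointer back without discarding later attempts.
        if not 0 <= index < len(self.attempts):
            raise IndexError(f"no attempt at index {index}")
        self.current = index
        return self.attempts[index]

    def reproduce_inputs(self, index: int) -> tuple[str, Optional[int]]:
        # Reproducibility: return the exact inputs used for an earlier attempt.
        attempt = self.attempts[index]
        return attempt.prompt, attempt.seed

    def timeline(self) -> list[str]:
        # Visible history: one line per attempt, with ">" marking the current one.
        return [
            f"{'>' if i == self.current else ' '} {i}: {a.prompt}"
            for i, a in enumerate(self.attempts)
        ]


history = PromptHistory()
history.record("a cat in a hat", "image_1.png", seed=7)
history.record("a cat in a red hat", "image_2.png", seed=7)
history.revert(0)
print("\n".join(history.timeline()))
# > 0: a cat in a hat
#   1: a cat in a red hat
```

Keeping the log append-only means a user can jump back to an earlier result and keep iterating from it without losing any later attempts, so reverting never costs them work.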

🔁 Social remixing as part of the experience

Prompting has become an activity in its own category. People learn by seeing how others engineer their prompts and then iterating on them. Designers should consider how to encourage that behavior within the platform to support and retain users.

📊 Evolving validation methods

You can’t A/B test a conversation the way you test a button or a color change. We need new ways to understand conversational flow, users' mental models of text-based interfaces, and emergent user behaviors.

⚙️ AI technical knowledge as a requirement

Designers should understand basic model capabilities, limitations, and behaviors to design effective experiences. Building an AI tool without that knowledge is like designing a website without knowing HTML or CSS. You can still do it, but technical knowledge is often what separates a typical designer from a good one.

Final Thought

We might be witnessing the biggest shift in human-computer interaction since the graphical user interface. The designers who master language-first interfaces today will help shape the future of user experience.

Glossary

- LLM (Large Language Model): A type of AI model trained on large amounts of text to generate human-like responses.

- GenAI (Generative AI): A type of artificial intelligence that can produce content, such as text, images, audio, or code, based on patterns learned from large datasets.

- Prompt Engineering: The skill of writing effective inputs to guide AI-generated outputs.

- Training Dataset: The large body of text, code, images, or other data used to teach a generative model.

- Mental Model: In UX terms, a user's internal understanding of how a system works based on their interactions with it.

References

- Krishna, R., et al. "Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations." International Journal of Computer Vision, 2017.

- Mahdavi Goloujeh, A., Sullivan, A., & Magerko, B. "The Social Construction of Generative AI Prompts." CHI Conference on Human Factors in Computing Systems, 2024.

- "Midjourney Statistics." Moxby Blog, 2025.