Natural Language: The Best & Worst UI We've Got

Natural language is powerful but not without flaws. Here is why, and how human-centered AI designers can avoid the pitfalls.

TL;DR - Natural language is driving the next interface revolution, but it creates unpredictability because AI lacks common ground with users and operates as a black box, which makes reliable results hard to get. Four design patterns can make generative AI more efficient and usable: Output Alignment, Pre-Defined Boundaries, Active Memory, and Contextual Real-Time Suggestions.

This article builds on the core idea from Stanford professor Maneesh Agrawala’s Unpredictable Black Boxes Are Terrible Interfaces, an updated version of his 2022 HAI talk. It also covers how natural language input disrupted traditional UI design, and which new design patterns UX and AI designers need to know to keep up with today’s AI technology.

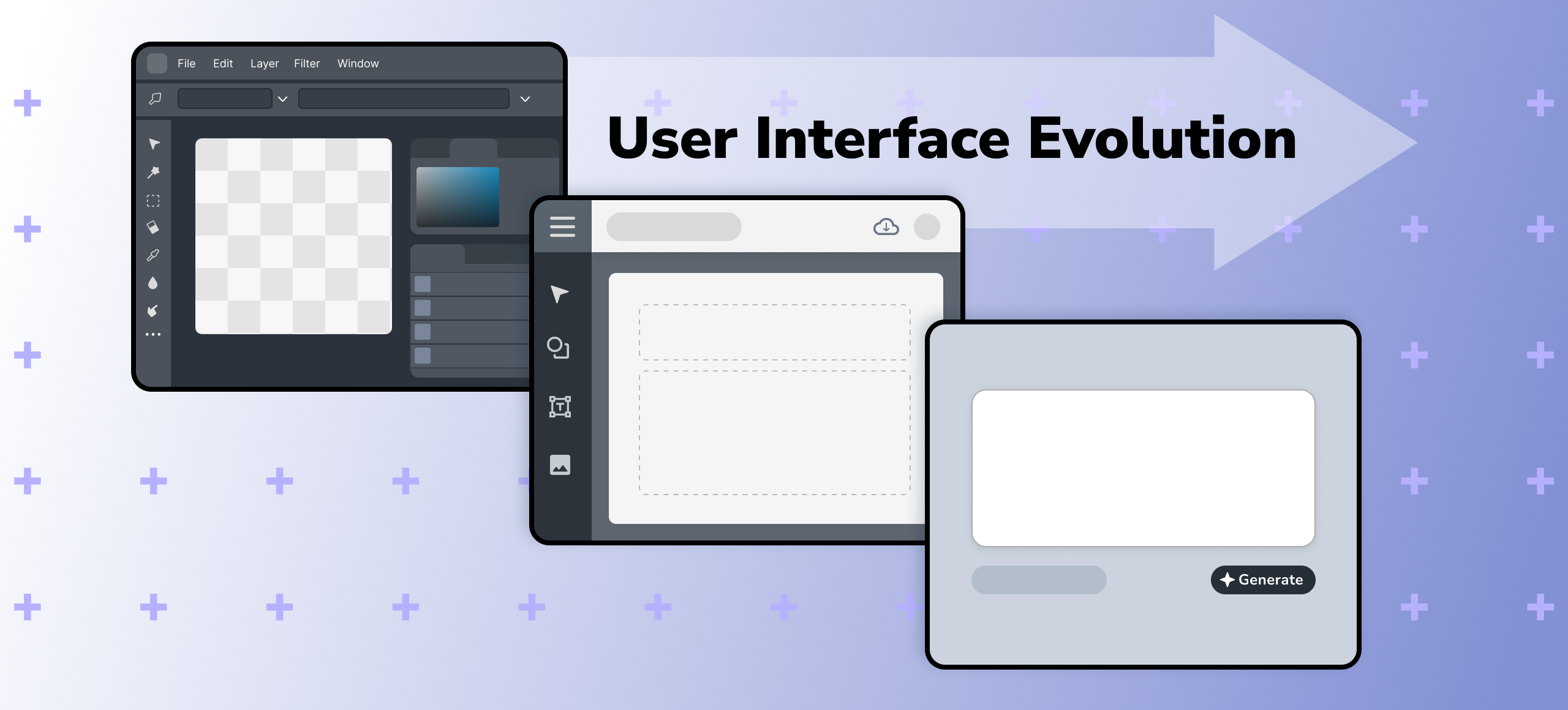

The Interface Revolution

In my previous article, Understanding Natural Language Input as a UX Designer, I explored why natural language became the default way to interact with generative AI like LLMs and image generators. I also talked about how this shift forces UX designers to understand new user behaviors and rethink their validation methods.

I'm convinced we're witnessing the most significant cognitive shift in human-computer interaction since the GUI revolutionized our digital spaces. Other revolutionary technologies like touchscreens changed how we interact, but natural language changes how we conceptualize and plan.

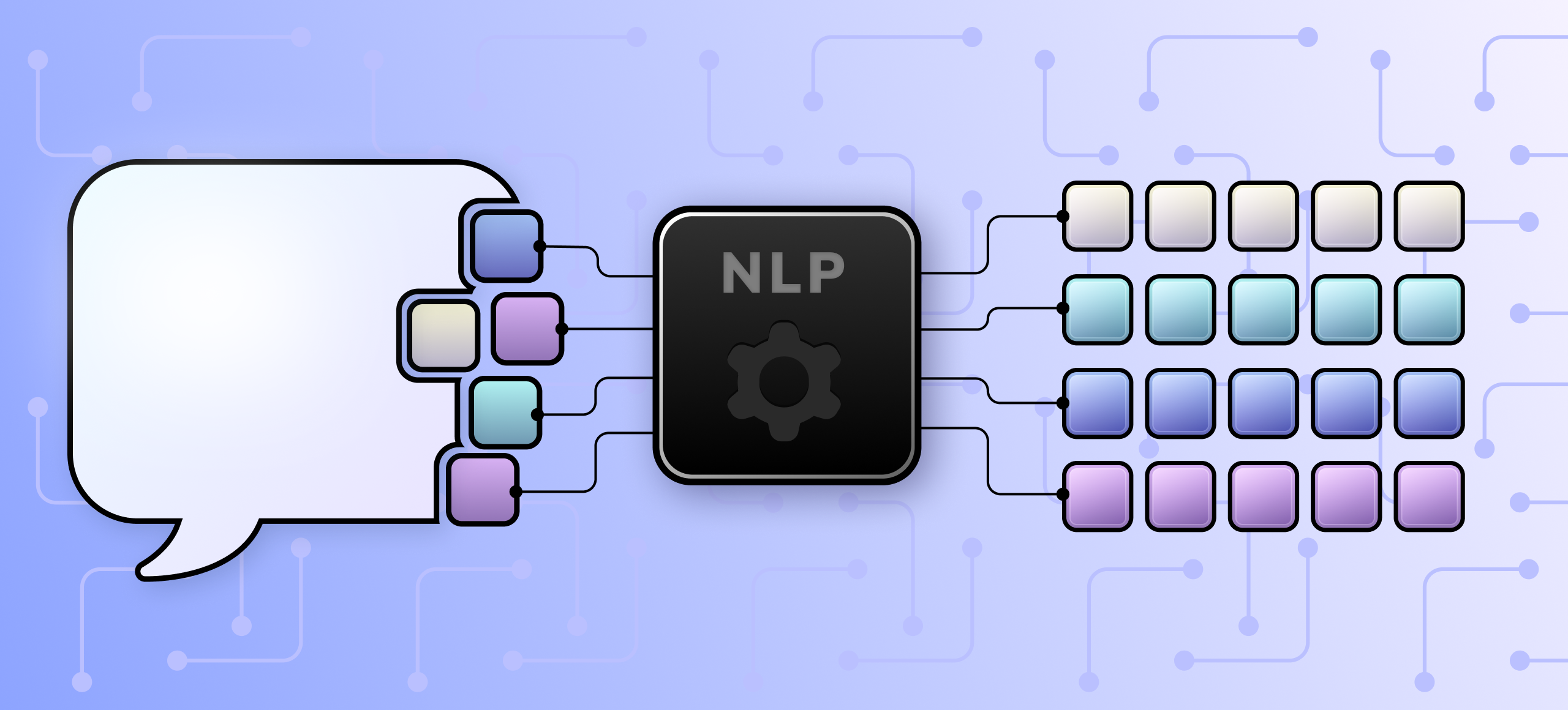

NLP technology has advanced to the point where most people are now comfortable with conversational interfaces, and those interfaces are only getting better. Just like the GUI revolution, natural language input has changed not only how we interact with computers but also how we think about and approach digital workflows.

Following this familiar pattern of interface evolution, natural language offers a new frontier of accessibility and expands the possibilities in human-computer interaction. But when used without proper design considerations, it can create some of the worst user experiences.

Before we dive into why natural language can create terrible UX, we need to understand communicative language.

How Communicative Language Works

Let’s get philosophical for a bit here.

If I say the word "plant," what's the first thing that comes to mind? In most cases, you probably think of leafy vegetation or potted plants—or you might think of the action of planting a tree.

When it stands alone, this word can mean multiple things. Beyond those examples, it could also refer to a manufacturing plant, a power plant, or the action of planting fake evidence to frame someone at a crime scene.

Context is crucial for communicating information and ideas.

In high-context languages like Japanese, Chinese, or Arabic, the meaning of a phrase can be completely lost without context.

Here's where it gets more complicated: context alone isn't even enough.

Natural language also depends heavily on common ground.

The concept of common ground is what psycholinguists Clark & Schaefer (1989) described as the shared knowledge, beliefs, and assumptions that make communication work.

So, even if you know I'm talking about leafy green plants, you still wouldn’t know exactly which kind I had in mind. I can only hope that you've seen the plants I've seen, and that our concepts of plants are close enough.

If you and I grew up in the same area, we would likely have enough common ground that when you say "I'm thinking of getting some plants," you can expect me to understand without explaining further.

But what if you're not talking to me, but to a computer instead? How much common ground does a computer share with you?

With Clear Intent, Unpredictability Is Friction

With no shared context or common ground with an AI model, users must form a new mental model from zero. Several rounds of trial and error are usually required to see a pattern—how the model interprets each word and phrasing. And even after that, a consistent pattern is not guaranteed.

Good UX is often predictable. An empty text box is not predictable and can be overwhelming to a user. When users can't build a clear mental model of how the product works, they frequently disengage.

When the output feels random, the tool doesn't feel usable, no matter how advanced the model behind it.

This predictability problem isn't unique to AI—it's a fundamental interface design principle that has now become prominent with natural language input.

Predictability is required for users to interact with a system effectively to get the results they aim for. A good system provides consistency, cues, and feedback for users to quickly form a mental model.

When users fail to predict, they are forced into trial-and-error cycles.

Think of a hotel shower with unlabeled faucets. There is no way to tell which direction to turn for hot water. And maybe the boiler has a delay. Maybe the pressure has to be just right. Getting a consistent, comfortable flow becomes a surprisingly challenging task. It would require you to turn the faucet, wait, feel the water, adjust, and repeat this frustrating process just to take a shower with the right water temperature.

The same fundamental UX problem plays out with natural language in generative AI.

Generative AI systems are often described as "black boxes"—models whose internal workings are not transparent or easily understood by humans.

As Stanford professor Maneesh Agrawala puts it, these black boxes "do not provide users with a predictive conceptual model. It is unclear how the AI converts an input natural language prompt into the output result." Even the designers of these systems usually can't explain the conversion process in ways that would help users build reliable mental models.

Worse, we humans often anthropomorphize AI systems, applying human-based mental models that are fundamentally mismatched to how these systems actually work. This reinforces the incorrect belief that AI ‘thinks’ like humans do, when "it is almost certainly the case that AI does not understand or reason about anything the way a human does", as Professor Agrawala notes.

Design Patterns to Improve GenAI Experience

We understand the challenges. Let's talk about how we might tackle them. Having watched generative AI evolve so far, I have spotted some recurring patterns in the interfaces of major GenAI tools. Here are four patterns to consider when designing natural language interfaces:

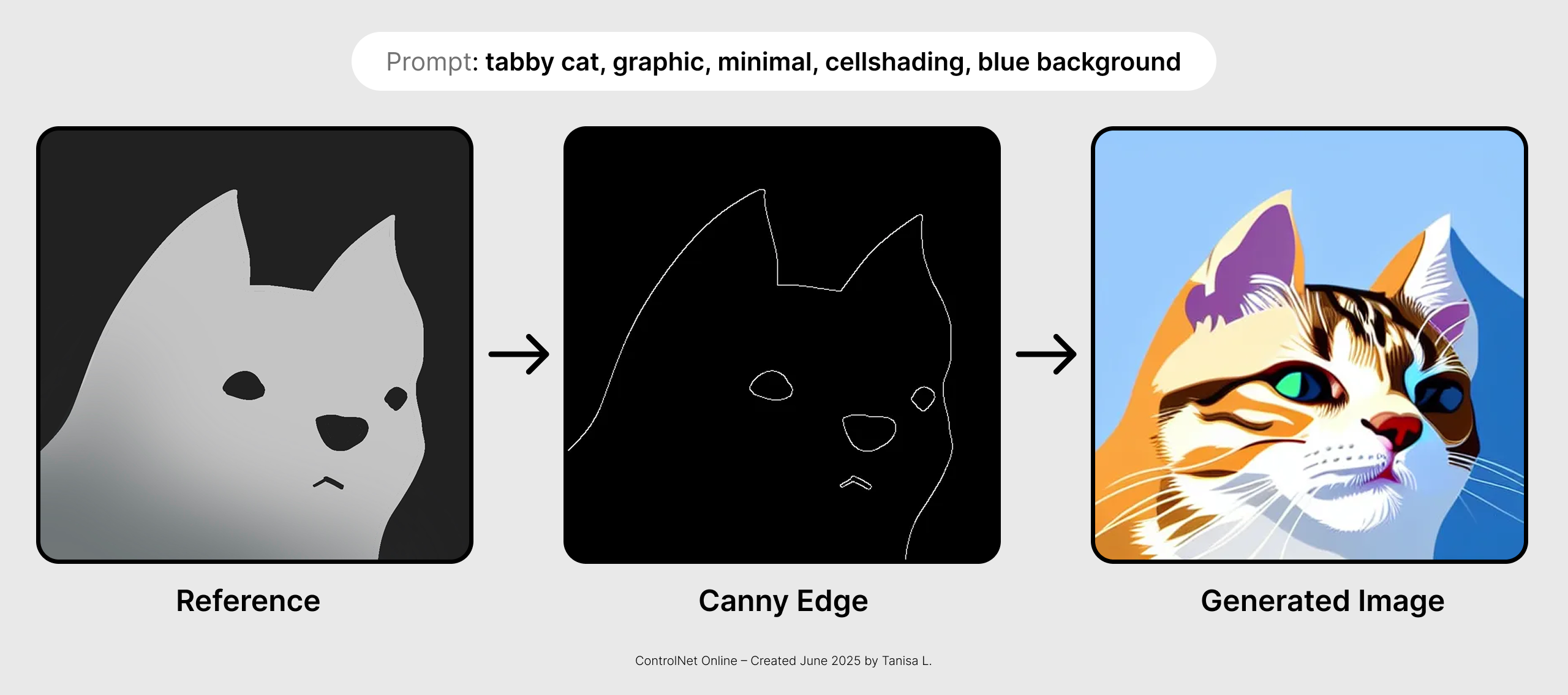

1. Output Alignment

This pattern empowers users with additional axes of input to overcome natural language limitations. Certain aspects like composition, stylistic nuance, or rhythm are difficult to articulate in words, so we design interfaces that let users supply examples the model can directly analyze.

This approach is especially impactful in image generation, where anchoring the model with reference visuals narrows the output space to better match the user's vision and makes it far more likely that the user reaches the desired result in fewer steps.

The core HCI principle behind this pattern is expressivity: enabling users to express their intent with higher fidelity. This principle extends across creative content types, though the technical methods vary.

Examples:

- Stable Diffusion ControlNet uses Blur, Canny, or Depth maps to guide image generation.

- ControlNet OpenPose applies an advanced computer vision library for human pose detection, making it possible to control human poses in generations within the Stable Diffusion framework.

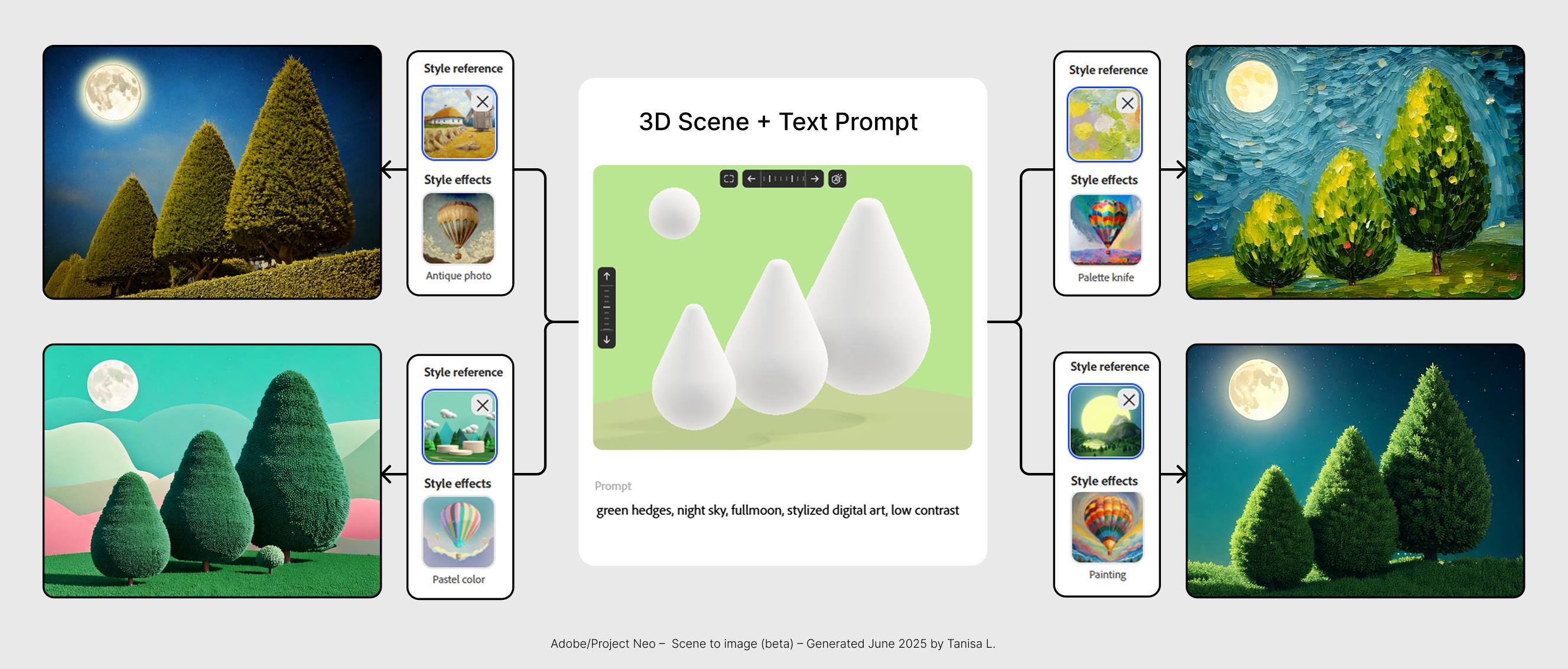

- Adobe Scene-to-Image generates images based on a 3D layout and style reference.

- OpenAI 4o’s Image Generation uses a process known as in-context learning to analyze and learn from user-uploaded images and integrate the details into image generation.

- Chat-based LLMs, by default, use in-context learning to find patterns in any examples provided in the user's prompt as references for style or structure.

Here’s an example of my hand-drawn reference translated to a Canny edge map to create visual guidance to generate a cat image following the same composition.

Another example is Adobe’s Scene-to-Image, where I combined various visual references to control the output’s composition, style, and color scheme. I used a simple 3D scene as the base and applied style references, like antique photos or palette knife painting, to produce different results with the same prompt and layout.
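For text, the in-context learning mentioned in the list above amounts to placing the user's examples in the prompt ahead of the new request. The following is a minimal sketch of that idea, not any vendor's API: the `Example` class, the `build_few_shot_prompt` function, and the prompt layout are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One input/output pair the user supplies as a style reference."""
    source: str
    target: str


def build_few_shot_prompt(instruction: str, examples: list[Example], new_input: str) -> str:
    """Assemble a prompt that shows the user's examples before the new input."""
    parts = [instruction.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        parts.append(f"Example {i} input: {ex.source}")
        parts.append(f"Example {i} output: {ex.target}")
        parts.append("")
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical usage: two references anchor tone and length for the third item.
prompt = build_few_shot_prompt(
    "Rewrite each product note as a short, playful headline.",
    [
        Example("Battery lasts 12 hours", "All day, no outlet needed"),
        Example("Weighs 900 grams", "Light enough to forget it's there"),
    ],
    "Screen is readable in sunlight",
)
print(prompt)
```

The design point is the same as with reference images: the user shows the style instead of trying to describe it.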

2. Pre-Defined Boundaries

I call this pattern Pre‑Defined Boundaries. Similar to setting ground rules with another person, the idea is to define constraints beforehand. Unlike prompts, which act as one‑time instructions within the workspace, these boundaries persist as rules that scope the entire space.

The core principle is to let users control which specific outputs an AI model should or should not produce. This improves predictability, reduces unpleasant surprises, and removes the need for users to repeat themselves.
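One way to picture the difference between a one-time prompt and a persistent boundary is the sketch below. It assumes a generic role/content message list, which is a common chat convention rather than a specific product's API; the `Workspace` class and its methods are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Holds rules that persist across every request made in this workspace."""
    boundaries: list[str] = field(default_factory=list)

    def add_boundary(self, rule: str) -> None:
        self.boundaries.append(rule)

    def build_messages(self, prompt: str) -> list[dict[str, str]]:
        """Combine the persistent rules with a one-time prompt."""
        messages = []
        if self.boundaries:
            rules = "\n".join(f"- {rule}" for rule in self.boundaries)
            messages.append({"role": "system", "content": rules})
        messages.append({"role": "user", "content": prompt})
        return messages


ws = Workspace()
ws.add_boundary("Answer in British English.")
ws.add_boundary("Never use tables.")

# The user states the rules once; both requests carry them automatically.
first = ws.build_messages("Summarize this meeting.")
second = ws.build_messages("Draft a follow-up email.")
```

Both `first` and `second` start with the same rules message, so the user never has to restate the constraints.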

Examples:

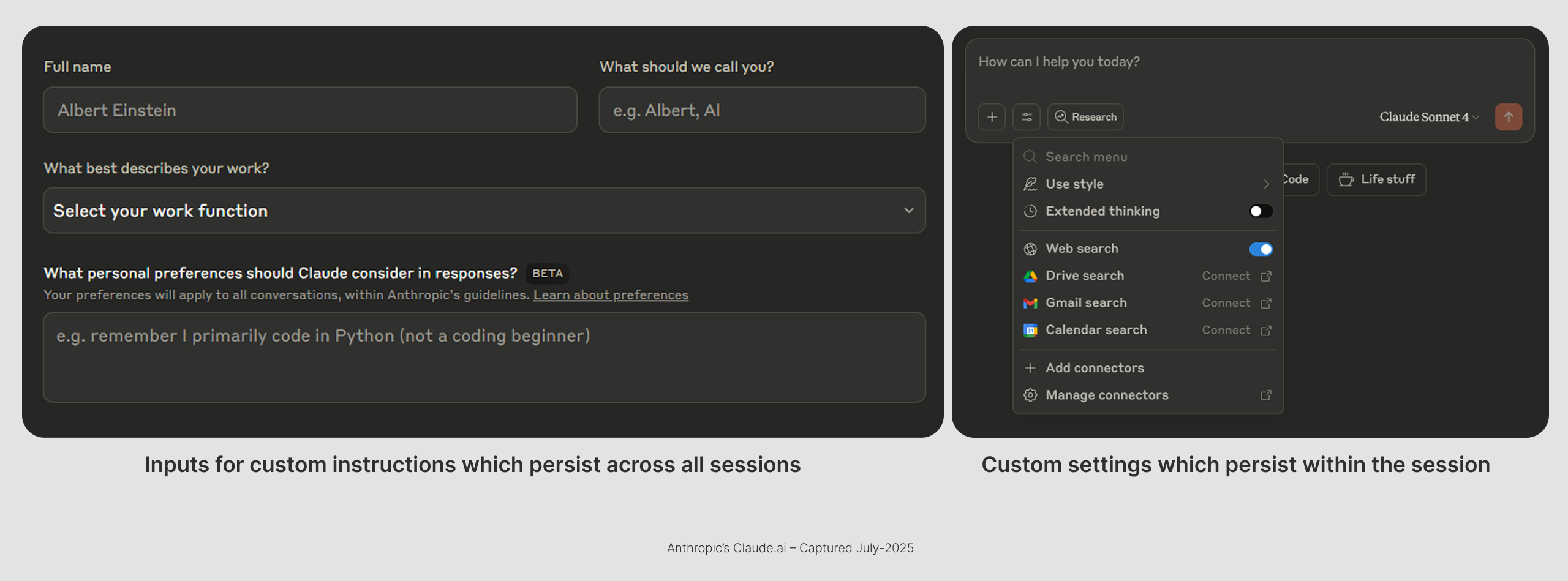

- ChatGPT and Claude allow users to add custom instructions in settings, influencing tone, output structure, or topic focus for all responses.

- ChatGPT Projects and Custom GPTs let users attach files and persistent instructions, which the model consistently references and prioritizes in every interaction across sessions.

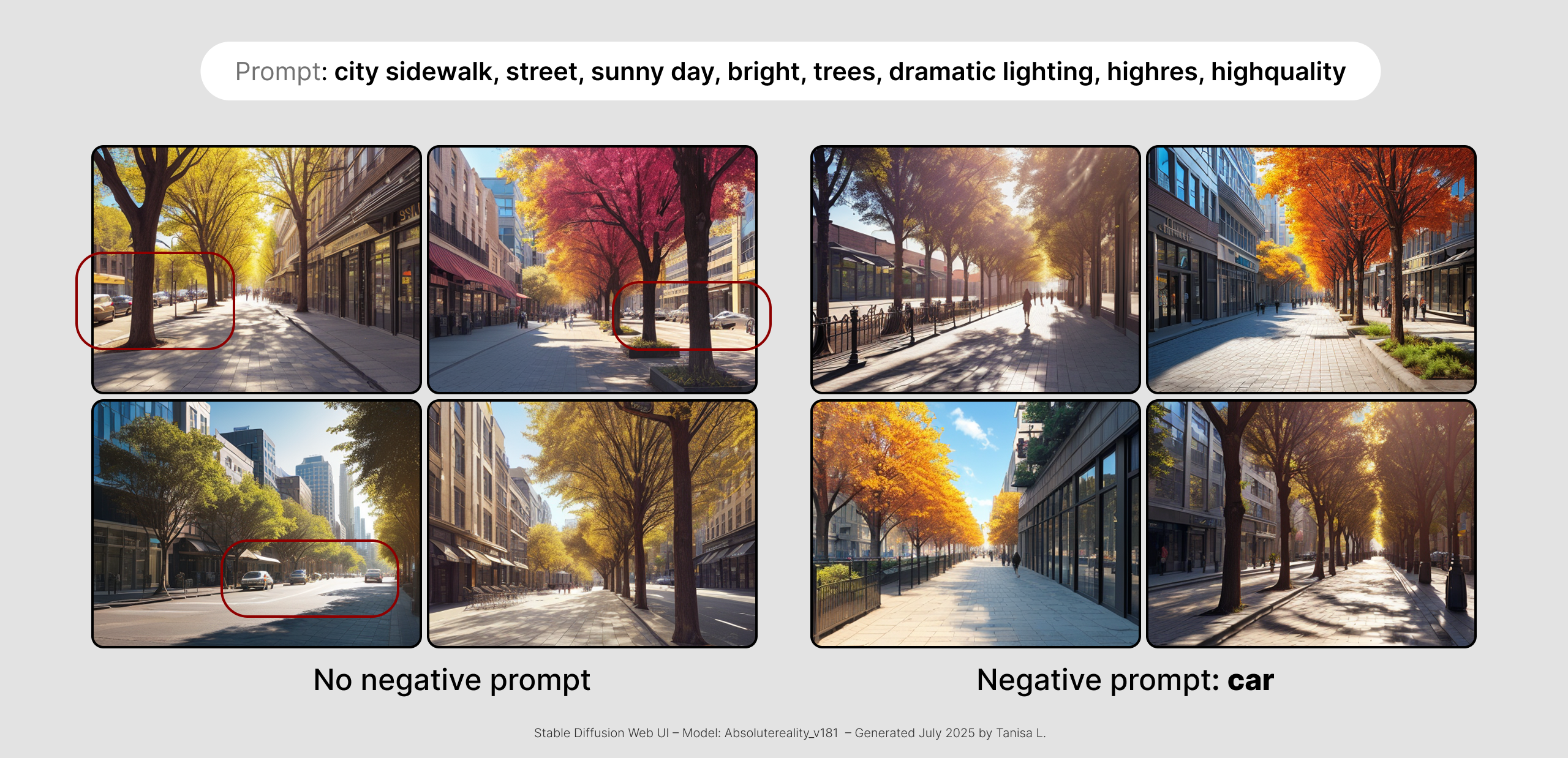

- Stable Diffusion Web UIs provide an optional Negative Prompt field, letting users specify elements to exclude from generated images. (Midjourney also supports negative prompts, but these are added to each prompt individually and do not persist as a pre-configured boundary.)

- ElevenLabs has a Dictionary feature that lets users define custom pronunciations for certain terms, like acronyms. This remains in effect across voice generations and ensures correct pronunciation, reducing unpredictable output.

Here’s an example of pre-defined boundaries in Claude.ai. Users can enter custom instructions that apply to all future conversations. Users can also enable or adjust custom settings like the response style for the current session, which persists until reconfigured.

Here's how negative prompts work in Stable Diffusion. When generating images of a city sidewalk, the model sometimes includes cars in the scene. This behavior is random and unpredictable. By adding “car” to the negative prompt field, we instruct the model not to include cars in the output. Many users keep permanent keywords such as “blurry” or “monochrome” in their negative prompt field to reduce unwanted results across generations.
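The habit of keeping permanent keywords in the negative prompt can be sketched as a small merge step that runs before each generation. This is an illustration under stated assumptions: the function names and the `prompt` / `negative_prompt` request fields are hypothetical and do not describe a particular Web UI.

```python
def merge_negative_prompt(persistent: list[str], per_image: list[str]) -> str:
    """Join persistent and one-off exclusions, dropping duplicates in order."""
    seen = set()
    merged = []
    for term in persistent + per_image:
        key = term.strip().lower()
        if key and key not in seen:
            seen.add(key)
            merged.append(term.strip())
    return ", ".join(merged)


def build_request(prompt: str, persistent: list[str], per_image: list[str]) -> dict[str, str]:
    """Build a hypothetical generation request carrying both prompt fields."""
    return {
        "prompt": prompt,
        "negative_prompt": merge_negative_prompt(persistent, per_image),
    }


# Keywords the user keeps across generations, plus one exclusion for this scene.
always_excluded = ["blurry", "monochrome"]
request = build_request("a city sidewalk at noon", always_excluded, ["car", "Blurry"])
print(request["negative_prompt"])
```

Here the persistent list acts as the boundary, while "car" is a one-off exclusion for this particular image.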

Example of images generated with and without a negative prompt using Stable Diffusion

3. Active Memory

This pattern is technically built on the same mechanism as Pre-Defined Boundaries, but it focuses on dynamically building and referencing shared information as a conversation or creative work progresses, much like how people create common ground through natural interaction.

The core concept is to create a sense of continuity and shared understanding. For a good experience, users must be able to decide what is stored, with full visibility and the ability to modify it easily. Explicitly disclosing how the stored information is used further promotes predictability and trust through system transparency.
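The requirements above (user control over what is stored, full visibility, easy editing, and disclosure of what is used) can be sketched as a small data structure. Everything here is an assumption for illustration: `MemoryStore` is a hypothetical class, and the word-overlap lookup in `recall` is a naive stand-in for whatever retrieval a real product uses.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """User-visible memory: every entry can be listed, edited, or removed."""
    entries: dict[int, str] = field(default_factory=dict)
    next_id: int = 1

    def remember(self, fact: str) -> int:
        """Store a fact and return its id so the UI can show and manage it."""
        entry_id = self.next_id
        self.entries[entry_id] = fact
        self.next_id += 1
        return entry_id

    def edit(self, entry_id: int, fact: str) -> None:
        if entry_id not in self.entries:
            raise KeyError(entry_id)
        self.entries[entry_id] = fact

    def forget(self, entry_id: int) -> None:
        self.entries.pop(entry_id, None)

    def recall(self, prompt: str) -> list[tuple[int, str]]:
        """Return entries sharing a word with the prompt, so the UI can disclose them."""
        words = set(prompt.lower().split())
        return [
            (entry_id, fact)
            for entry_id, fact in self.entries.items()
            if words & set(fact.lower().split())
        ]


memory = MemoryStore()
cat_id = memory.remember("User has a cat named Miso")
memory.remember("User prefers metric units")
memory.edit(cat_id, "User has two cats named Miso and Udon")

# The returned list is what an interface could show as "memories used".
used = memory.recall("Suggest toys for my cats")
```

Returning the matched entries, rather than silently injecting them, is what lets the interface tell the user which stored facts shaped a response.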

Examples:

- ChatGPT supports a Memory feature that automatically saves context from conversations with the user and can recall this information in future interactions.

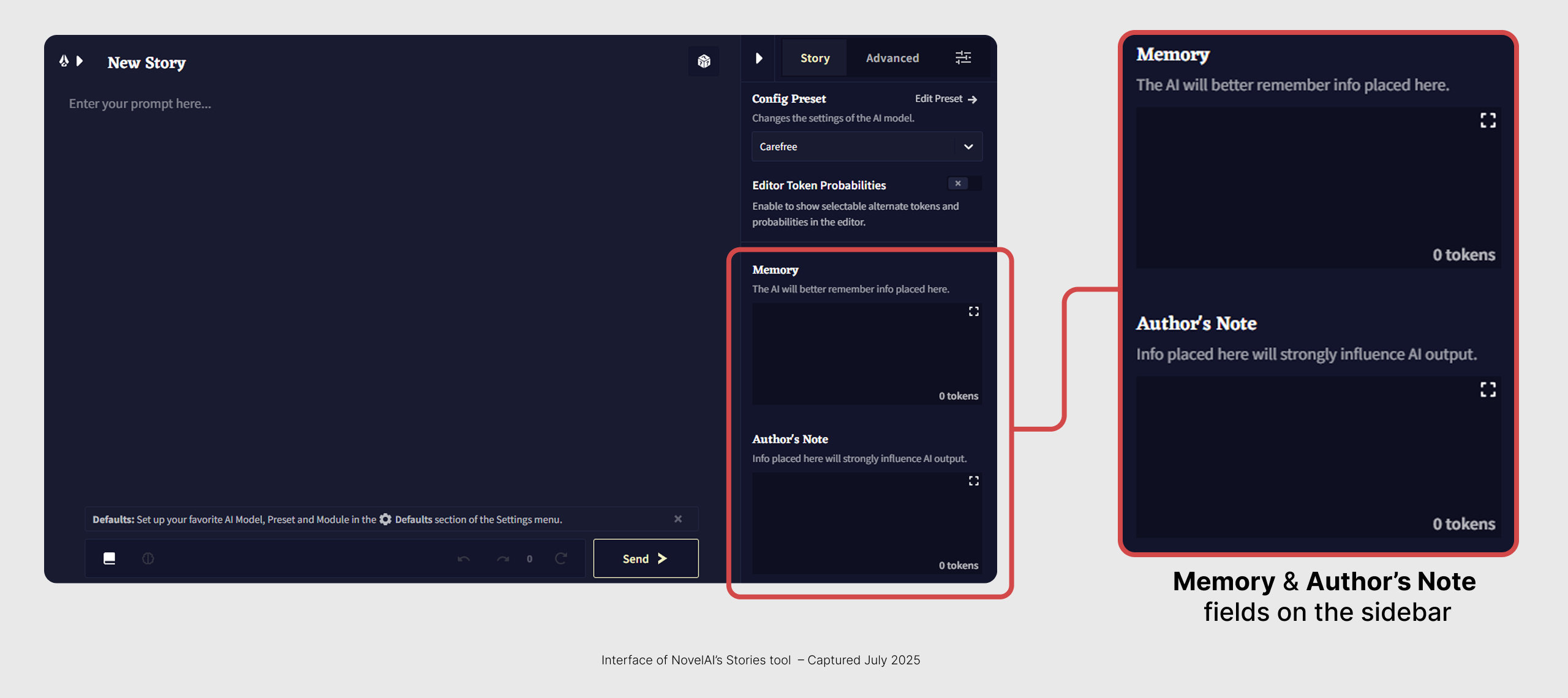

- NovelAI's Memory and Author's Note are referenced throughout a story writing session by the AI for narrative details, which allows the AI to follow context that might not be explicitly stated in the previous story text.

- Consistent Visual Elements carry specific elements or characters across generated images. Some Stable Diffusion-based apps let users train custom LoRA models for a consistent character appearance, though this currently requires extra training steps. We may see seamless memory features for image generation in the near future.

4. Contextual Real-Time Suggestions

Provide proactive, context-aware recommendations as the user interacts with the system. Suggestions and auto-complete are not new, but they require much better context-tuning in generative AI, where the creative space is broad and user intent is often specific. Poorly tuned suggestions can feel random or even become distracting clutter.

For example, many LLM chat interfaces show prompt examples at the start of a conversation. These are often ignored because they nudge users toward irrelevant tasks, like requesting a brownie recipe when the real goal is refining academic writing.

LLMs, by design, are next-word prediction systems. They can leverage this capability to produce highly relevant text or code that significantly boosts productivity if timed right. The key is to offer suggestions only when the system has high confidence about the user's intent, and to present them as an option the user can easily ignore.
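The "only when confident" rule can be sketched as a simple gate in front of the suggestion UI. This sketch assumes the system already has candidate completions with estimated probabilities; the `pick_suggestion` function and the 0.8 default threshold are illustrative choices, not values taken from any product.

```python
from typing import Optional


def pick_suggestion(candidates: dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the top completion only if its estimated probability clears the threshold."""
    if not candidates:
        return None
    best_text, best_score = max(candidates.items(), key=lambda item: item[1])
    return best_text if best_score >= threshold else None


# A clear sign-off context: one candidate dominates, so it is offered.
confident = pick_suggestion({"Best regards,": 0.92, "Cheers,": 0.05})

# An empty chat box: intent is unclear, so nothing is shown.
uncertain = pick_suggestion({"a brownie recipe": 0.30, "an essay outline": 0.28})
```

Showing nothing in the uncertain case is deliberate: a missing suggestion costs the user little, while an irrelevant one adds clutter.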

Examples:

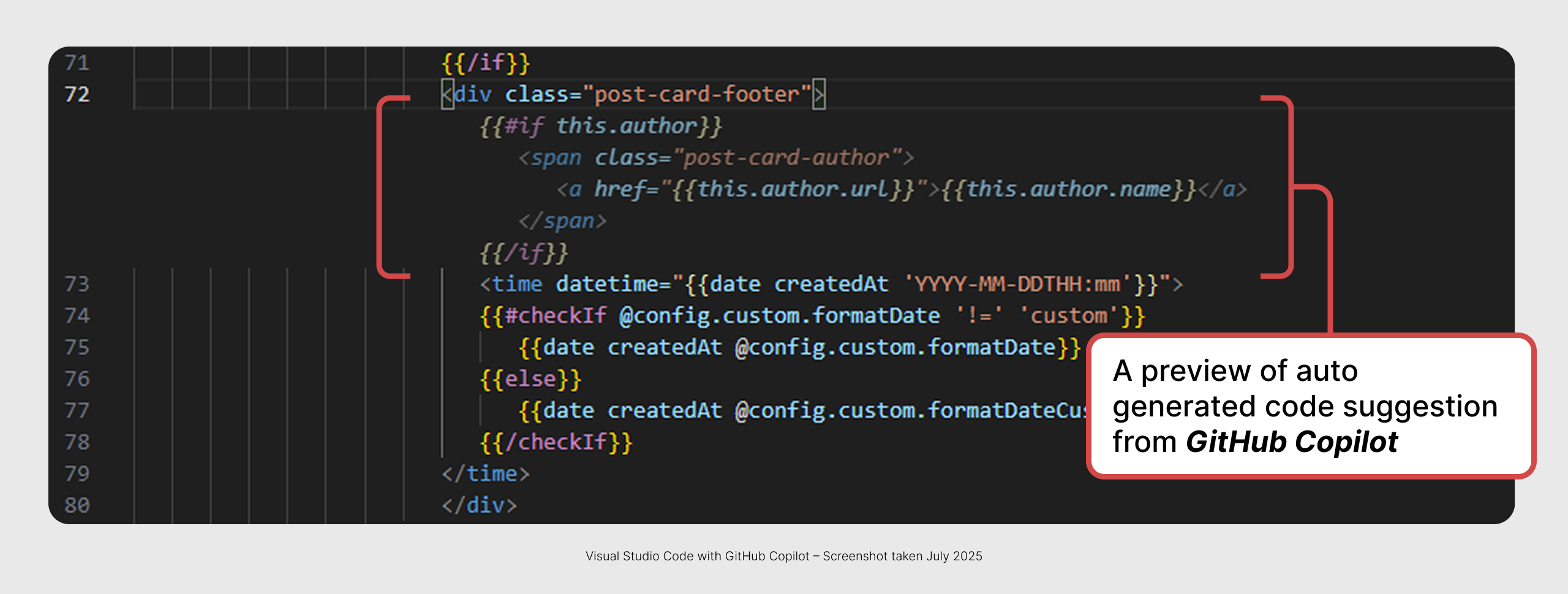

- GitHub Copilot offers inline code completions in editors like Visual Studio Code, with real-time reference to existing code and commonly used structures.

- Gmail Smart Compose provides sentence completions relevant to the subject and context, using commonly used email courtesy.

- Some LLM tools end a response with a next-step prompt for the user, such as "Would you like me to create a printable PDF file of this letter?" The user can ignore this or respond with an affirming prompt, like "yes", to quickly trigger it.

Final thoughts

Natural language alone makes generative systems unpredictable and hard to control. Generative AI works best when paired with design patterns that support co‑creation.

I am fully aware that new patterns might emerge tomorrow, and the technology might improve enough to eliminate some of these problems entirely by next year. But human cognition will remain the same, and the core principles behind these patterns will stay relevant even as natural language interfaces evolve.

Glossary

- LLM (Large Language Model): A type of AI model trained on large amounts of text to generate human-like responses.

- GUI (Graphical User Interface): A visual interface that allows users to interact with a system using elements like buttons, icons, and menus, rather than text commands.

- NLP (Natural Language Processing): A field of AI that enables machines to understand, interpret, and generate human language.

- Mental Model: In UX terms, a user's internal understanding of how a system works based on their interactions with it.

- Anthropomorphize: To attribute human traits, emotions, or reasoning to non-human entities.

- Black Box: In the context of AI, a system whose internal mechanisms are not transparent or easily understood by humans.

- LoRA (Low-Rank Adaptation): A lightweight fine-tuning technique used in language and image generation that influences outputs without retraining the entire model.

References

- Agrawala, M. “Unpredictable Black Boxes Are Terrible Interfaces.” Substack, 2023. [Link]

- Clark, H. H., and Schaefer, E. F. “Contributing to Discourse.” Cognitive Science, 13(2), 259–294, 1989.

- Acclaro. “Localizing Websites for High vs. Low-Context Cultures.” 2023. [Link]

- Ting-Toomey, S. Communicating Across Cultures. Guilford Press, 1999.